Designing for Safer Cars

Master's thesis project developed in collaboration with Aptiv LLC

Despite advances in vehicle sensor technology, the automotive industry has yet to fully exploit real-time, fused sensor data for enhancing in-vehicle user experiences. Our thesis project explores how to leverage these sensor data streams to improve safety and comfort for drivers and passengers. As vehicles become more sophisticated and sensor-rich, addressing this challenge is crucial for creating user-centric, seamless, and intuitive automotive experiences.

01

Problem Statement

The automotive industry has seen major advancements in vehicle sensor technology, yet the use of real-time, fused sensor data to enhance in-vehicle user experiences remains underexplored. The problem addressed in this thesis is how to leverage these vast streams of sensor data to improve both safety and comfort for drivers and passengers.

Can we really look to technology itself for a solution?

02

Research Process

User Interviews

We conducted interviews with drivers and automotive experts to uncover pain points in existing in-vehicle systems. Key insights from these conversations included the need for intuitive interfaces that minimize cognitive load and increase driver comfort.

Literature Review

We reviewed existing academic and industry literature on multimodal interaction, sensor fusion, and automotive UX. We also conducted an in-depth analysis of leading infotainment systems, including Mercedes' MBUX, BMW's iDrive, Audi's MMI, Volkswagen's MIB, and the Android Automotive interface developed by Aptiv for Polestar and Volvo. Our analysis revealed that while these interfaces have made significant strides in usability and feature integration, there is still room for improvement in leveraging real-time sensor data to enhance the user experience. This review shaped our understanding of current capabilities and informed our design approach.

Data Analysis & Conclusion of Initial Research

The collected data were analyzed to draw meaningful connections between user needs and sensor capabilities. From the user interviews, we found that real-time data should be used to inform adaptive and anticipatory user interfaces. Expert insights and existing research helped us to frame our design hypotheses and guide the development of prototypes that address both safety and comfort. The combined research identified gaps, such as the underutilization of sensor data for proactive user assistance, which became focal points for our design solutions.

This helped us narrow our focus and formulate our two research questions:

Research Question 01

How can designers make better use of vehicle sensor data to create more optimised vehicular user experiences?

Research Question 02

How can designers estimate the value that UX designs relying on vehicle sensor data add to the vehicular user experience?

03

Design Process

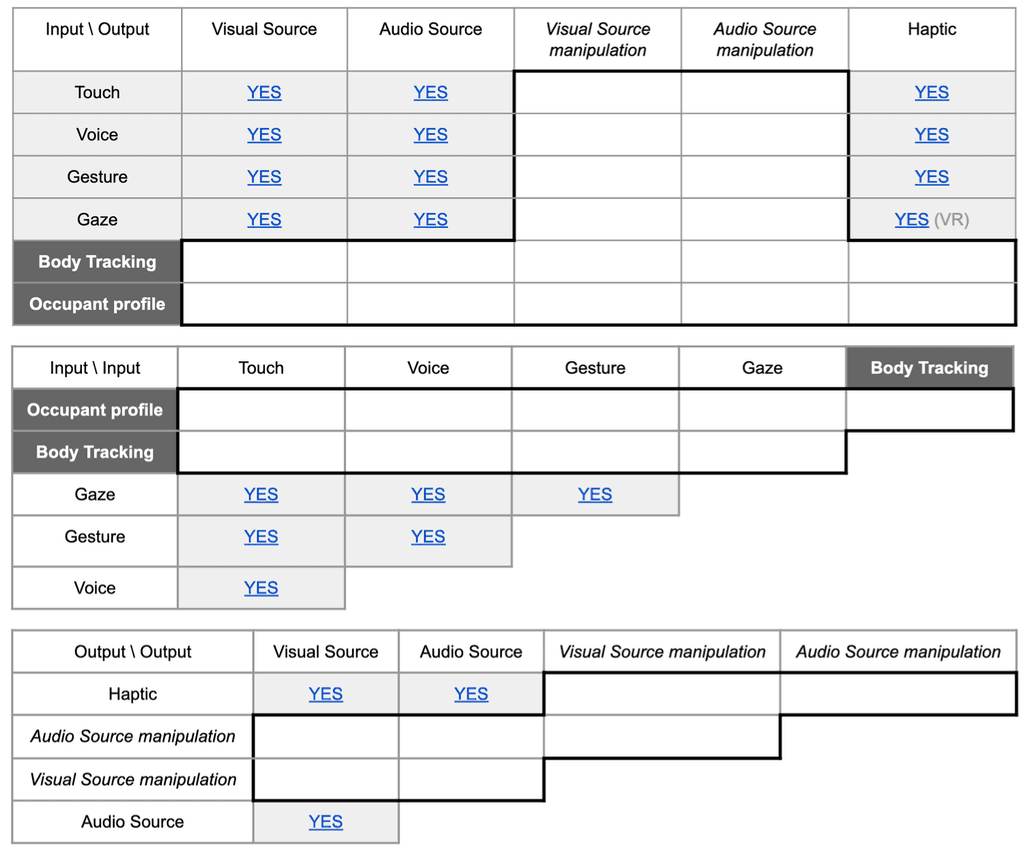

Ideation and Brainstorming

Based on the initial research, we built a table (see image below) charting the different input modalities (such as touch, voice, and gesture) on the Y-axis against the mediums of output (such as visual, auditory, and haptic) on the other axis. We selected the modalities that the proprietary ADAS platform used in our thesis project was capable of tracking or monitoring. We used co-design workshops and methods like ARSCI (Adding, Removing, Skewing, Combining, Imbalance) to generate multiple design concepts. This phase emphasized open exploration, leading to various ideas on how to transform raw sensor data into meaningful user experiences.

Using these methods, we generated 37 ideas formulated from 275 possible combinations.
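As a rough illustration of how a table like this yields candidate combinations, the sketch below pairs every input modality with every output medium. The two lists contain only the examples named above, not the full table, and how the 275 combinations were counted is not detailed here. The function name `modality_grid` is hypothetical.

```python
from itertools import product

# Illustrative lists only: the examples named in the text, not the full table.
INPUT_MODALITIES = ["touch", "voice", "gesture"]
OUTPUT_MEDIUMS = ["visual", "auditory", "haptic"]


def modality_grid(inputs, outputs):
    """Return every (input modality, output medium) pairing as a list of tuples."""
    return list(product(inputs, outputs))


if __name__ == "__main__":
    grid = modality_grid(INPUT_MODALITIES, OUTPUT_MEDIUMS)
    print(f"{len(grid)} candidate combinations")
    for input_modality, output_medium in grid:
        print(f"{input_modality} -> {output_medium}")
```

Each pairing is only a prompt for ideation. Deciding which combinations become actual concepts still happens in the workshops.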

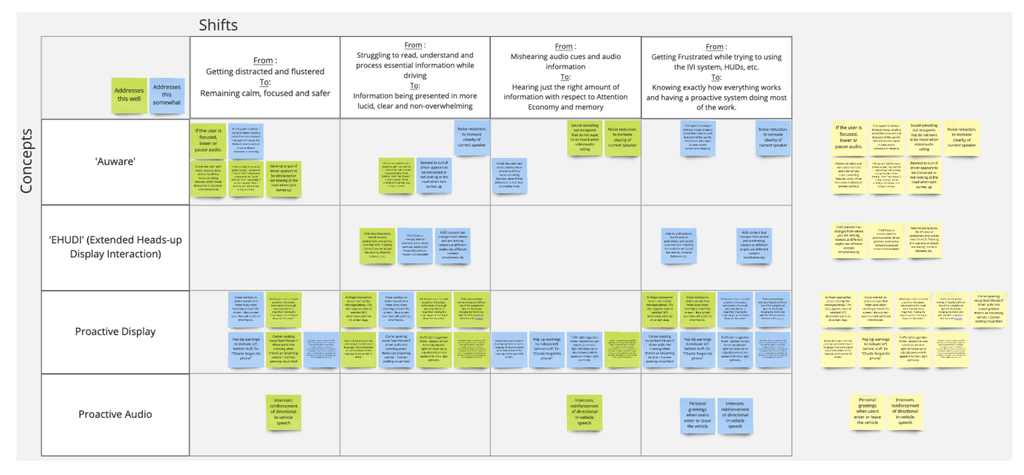

We then applied the Theory of Change worksheet from IDEO (see below) to the final ideas.

This left us with six ideas:

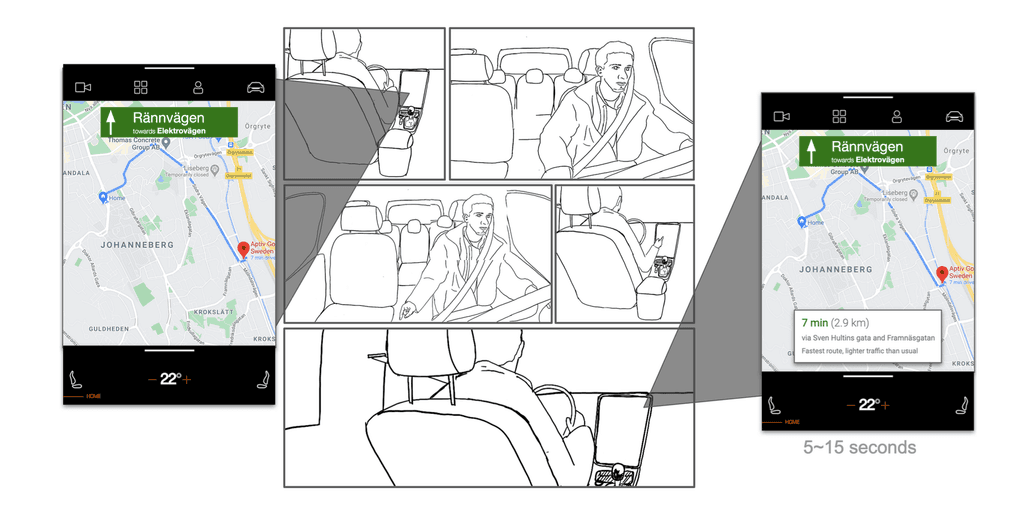

Sketches and Wireframes

01

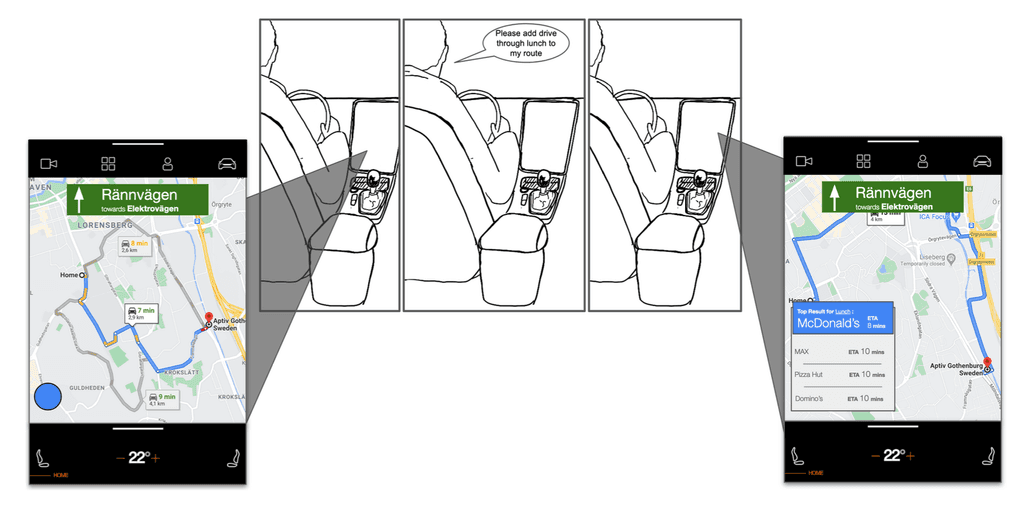

Add to route

Users ask for driving directions using their voice based on what they see around them.

Sensors Used: In-cabin mics, GPS, infotainment display, and cameras on the front of the car.

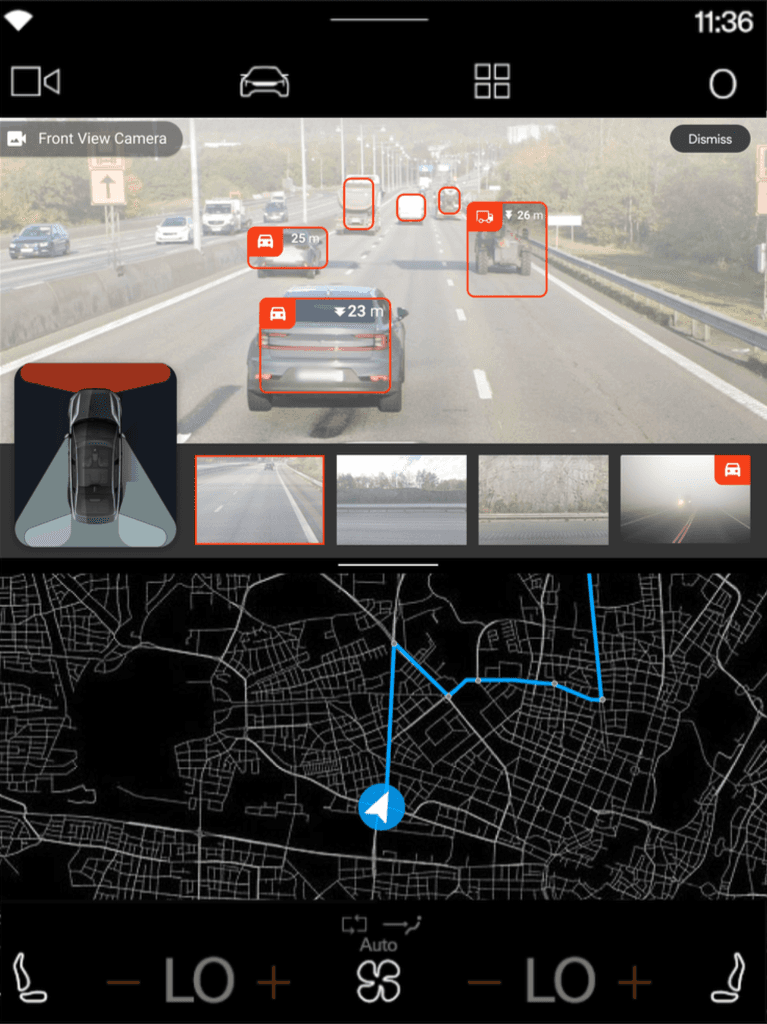

02

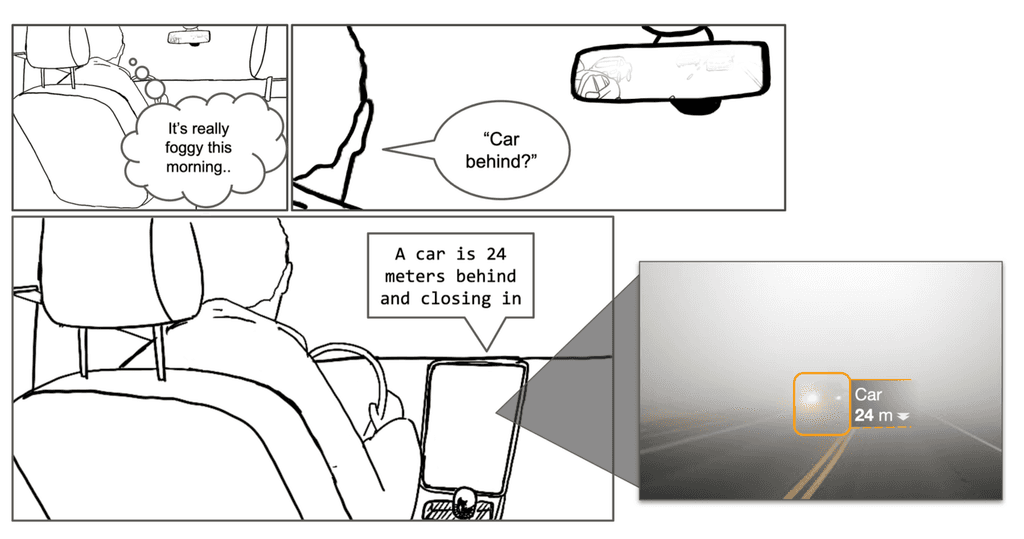

ADASight

The driver can ask, or be shown, whether there is a vehicle behind or in front in low-visibility conditions.

Sensors Used: In-cabin mics, ADAS radar array, infotainment display, and cameras on the front and rear of the car.

03

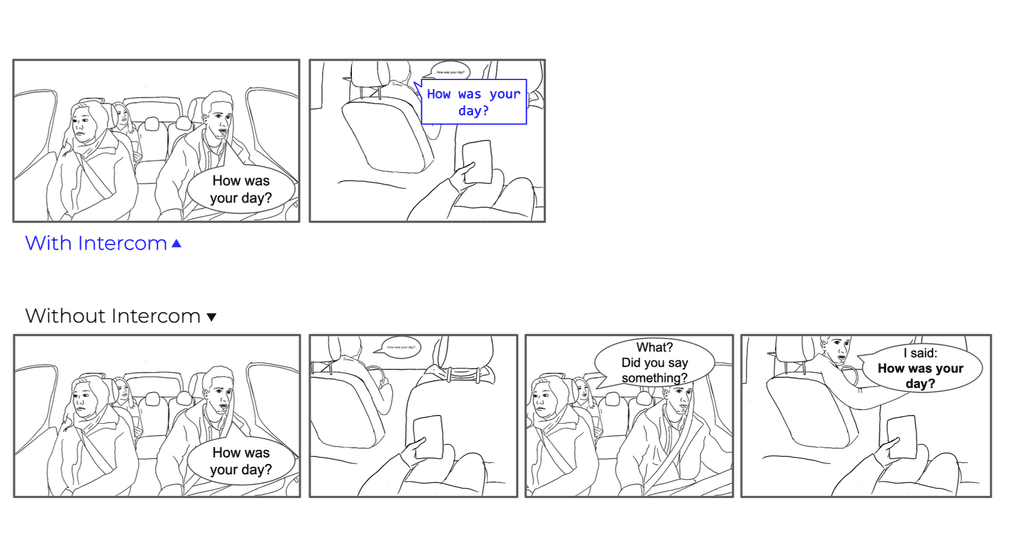

Intercom

Allows occupants in the front of the vehicle to converse with passengers in the rear by subtly enhancing the volume and clarity of their speech.

Sensors Used: In-cabin mics, driver alertness cameras, and in-cabin speakers.

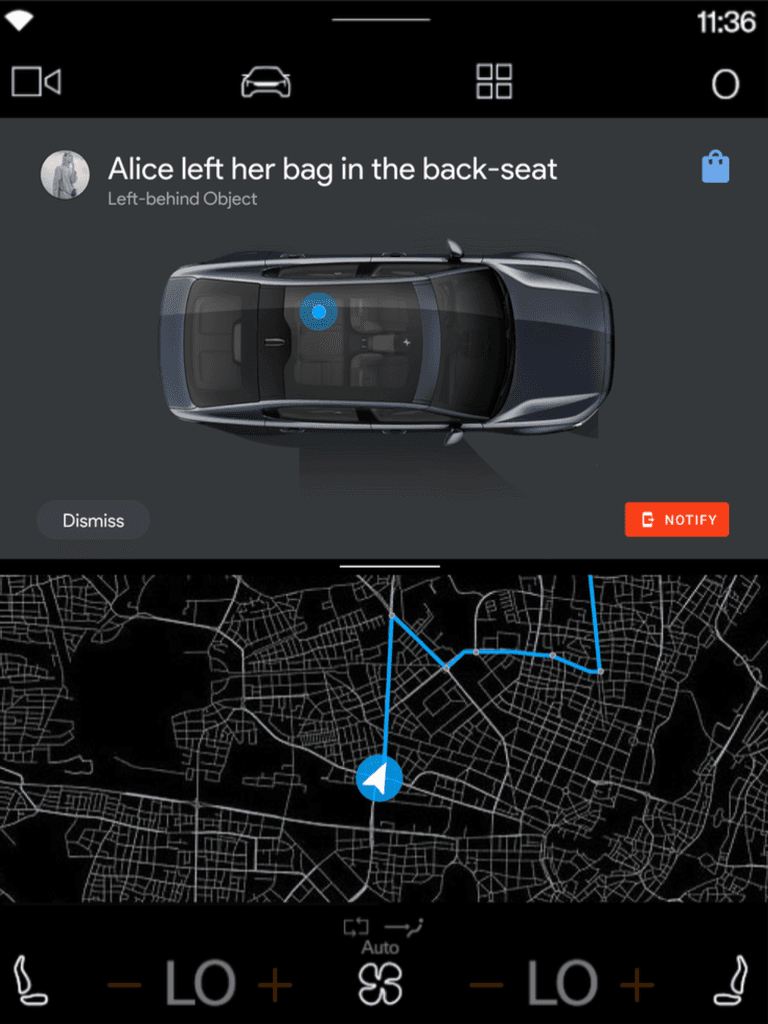

04

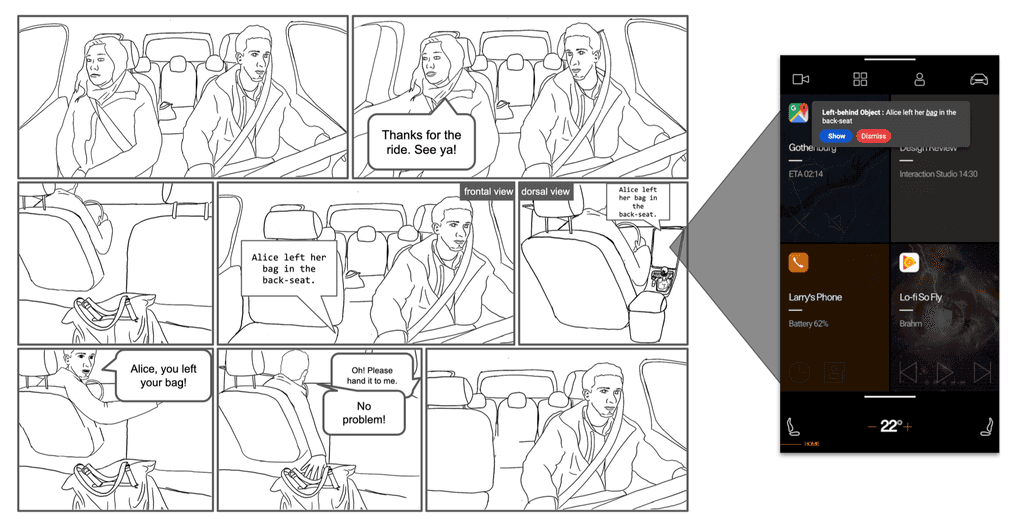

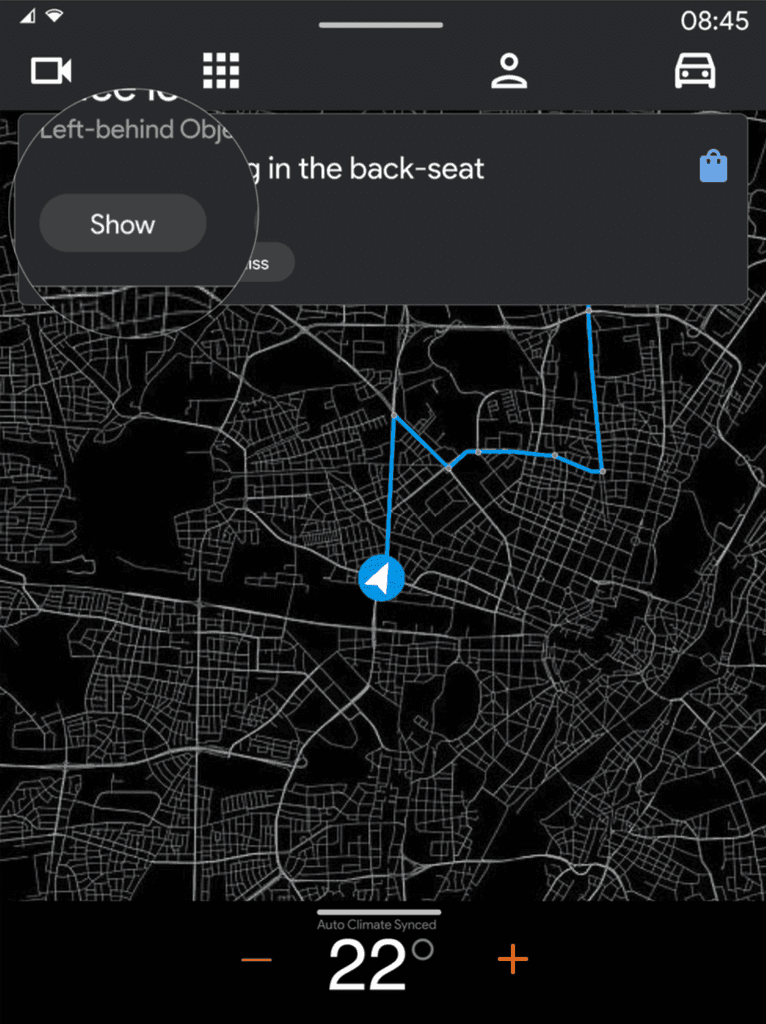

Left-behind object

Vehicle occupants are alerted if someone ('X') leaves something ('Y', such as a bag) behind while exiting the vehicle.

Sensors Used: In-cabin speakers, seat load sensors, infotainment display, and in-cabin cameras.

05

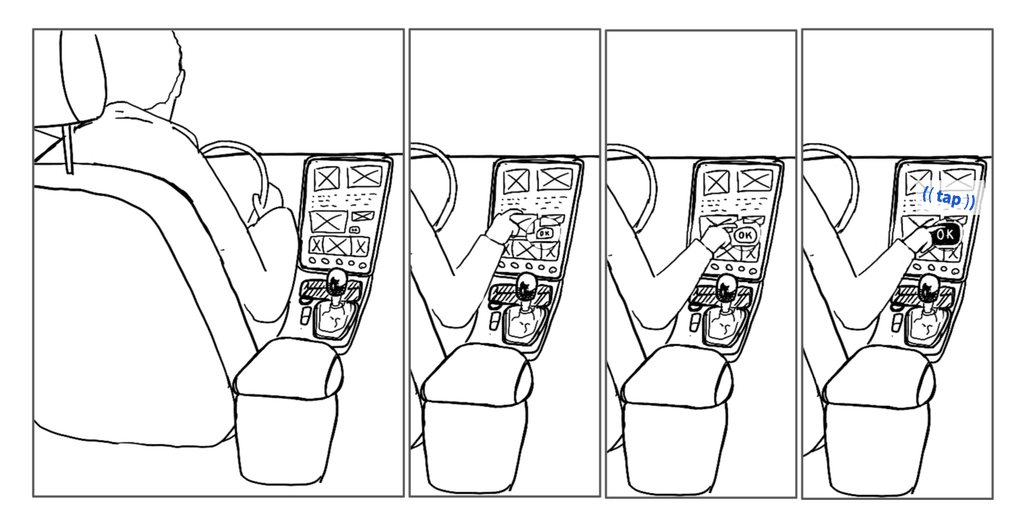

Magnification

Allows the occupants in the front of the vehicle to precisely hit smaller touch targets without directly looking at the screen while the vehicle is in motion.

Sensors Used: In-cabin gesture radar, driver alertness cameras, and infotainment display.

06

Tooltip

Preview relevant information before opening an app.

Sensors Used: In-cabin gesture radar, driver alertness cameras, and infotainment display.

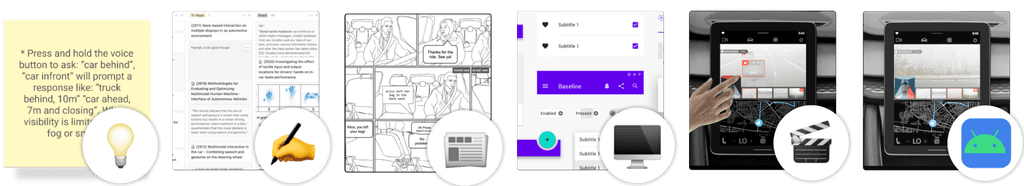

Prototype Fidelities

We then developed our ideas through the following steps before presenting the final, higher-fidelity prototypes to our UX test volunteers.

From left to right: we started off with simple Post-it ideas, wrote scenarios for storyboards, created mockups, showcased interactions in video prototypes, and finally realized the ideas as Android apps using Kanzi and Android Studio.

Prototypes (Low and High Fidelity)

We developed prototypes, starting with low-fidelity versions that we tested with users to gather early feedback. These evolved into high-fidelity, interactive mockups that were closer to the final product.

04

Final Design Solution

The final prototypes we tested were functional applications built for the Android Automotive platform using Kanzi and Android Studio. These high-fidelity prototypes were also the deliverables for Aptiv, the company we were working with.

01

Left-behind object

Vehicle occupants are alerted if someone ('X') leaves something ('Y', such as a bag) behind while exiting the vehicle.

SUS Score: 84.82 (out of 100 - higher is better)

DALI Score: 1.96 (out of 7 - lower is better)
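For readers unfamiliar with how a SUS score is derived, the sketch below applies the standard System Usability Scale scoring rule to each participant's ten responses and then averages across participants. The responses shown are made-up example data, not results from this study, and the function names `sus_score` and `mean_sus` are hypothetical.

```python
def sus_score(responses):
    """Score one participant's ten SUS responses (each 1 to 5) on a 0-100 scale."""
    if len(responses) != 10:
        raise ValueError("SUS needs exactly 10 responses")
    if any(r < 1 or r > 5 for r in responses):
        raise ValueError("each response must be between 1 and 5")
    total = 0
    for index, response in enumerate(responses):
        if index % 2 == 0:
            # Items 1, 3, 5, 7, 9 are positively worded: contribute (response - 1).
            total += response - 1
        else:
            # Items 2, 4, 6, 8, 10 are negatively worded: contribute (5 - response).
            total += 5 - response
    # The summed contributions (0 to 40) are scaled to 0-100.
    return total * 2.5


def mean_sus(all_responses):
    """Average the SUS scores of several participants."""
    scores = [sus_score(r) for r in all_responses]
    return sum(scores) / len(scores)


if __name__ == "__main__":
    # Made-up example data for two participants.
    example = [
        [4, 2, 4, 2, 4, 2, 4, 2, 4, 2],
        [3, 3, 3, 3, 3, 3, 3, 3, 3, 3],
    ]
    print(mean_sus(example))
```

A reported SUS figure such as the one above is typically this kind of mean over all test participants.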

02

ADASight

The driver can ask, or be shown, whether there is a vehicle behind or in front in low-visibility conditions.

In ADASight's feed selector, the selected camera feed is highlighted in burnt orange, while unselected feeds are shown in grey.

SUS Score: 70.89 (out of 100 - higher is better)

DALI Score: 3.89 (out of 7 - lower is better)

03

Magnification

Allows the occupants in the front of the vehicle to precisely hit smaller touch targets without directly looking at the screen while the vehicle is in motion.

SUS Score: 87.5 (out of 100 - higher is better)

05

Conclusion

This thesis project explored how real-time, fused vehicle sensor data can be leveraged to enhance multimodal in-vehicle experiences, focusing on safety and comfort. Through a combination of extensive research and iterative design processes, we developed innovative solutions that successfully demonstrated the potential of sensor-driven, adaptive interfaces.

Key results included measurable improvements in reducing cognitive load and increasing driver comfort, as validated through both qualitative and quantitative evaluations. The ARSCI method proved effective in generating creative and user-centered design concepts, while our prototypes showcased how proactive feedback and adaptive features could transform the in-car experience.

Despite challenges like data accuracy and system integration, the project provided valuable insights and laid the groundwork for future advancements in automotive interaction design.